Big data can tell us what’s wrong, not what’s right.

Nassim Taleb

Nassim N. Taleb is the author of Antifragile, from which this piece is adapted. He is a former derivatives trader who became a scholar and philosophical essayist. Taleb is currently a distinguished professor of risk engineering at New York University’s Polytechnic Institute. His works focus on decision making under uncertainty, in other words “what to do in a world we don’t understand.” His other book is The Black Swan: The Impact of the Highly Improbable.

We’re more fooled by noise than ever before, and it’s because of a nasty phenomenon called “big data.” With big data, researchers have brought cherry-picking to an industrial level.

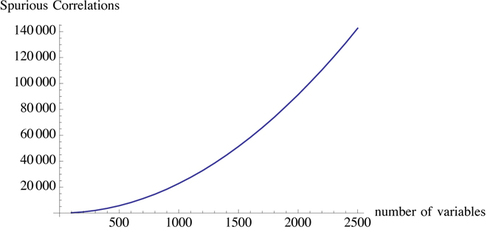

Modernity provides too many variables, but too little data per variable. So the spurious relationships grow much, much faster than real information.

In other words: Big data may mean more information, but it also means more false information.

Just like bankers who own a free option (they keep the profits and transfer the losses to others), researchers have the ability to pick whatever statistics confirm their beliefs (or show good results) … and then ditch the rest.

Big-data researchers have the option to stop doing their research once they have the right result. In options language: The researcher gets the “upside” and truth gets the “downside.” It makes him antifragile, that is, capable of benefiting from complexity and uncertainty — and at the expense of others.

But beyond that, big data means anyone can find fake statistical relationships, since the spurious rises to the surface. This is because in large data sets, large deviations are vastly more attributable to variance (or noise) than to information (or signal). It’s a property of sampling: In real life there is no cherry-picking, but on the researcher’s computer, there is. Large deviations are likely to be bogus.

We used to have protections in place for this kind of thing, but big data makes spurious claims even more tempting. And fewer and fewer papers today have results that replicate: Not only is it hard to get funding for repeat studies, but this kind of research doesn’t make anyone a hero. Despite claims to advance knowledge, you can hardly trust statistically oriented sciences or empirical studies these days.

This is not all bad news though: If such studies cannot be used to confirm, they can be effectively used to debunk — to tell us what’s wrong with a theory, not whether a theory is right.

Another issue with big data is the distinction between real life and libraries. Because of excess data as compared to real signals, someone looking at history from the vantage point of a library will necessarily find many more spurious relationships than one who sees matters in the making; he will be duped by more epiphenomena. Even experiments can be marred with bias, especially when researchers hide failed attempts or formulate a hypothesis after the results — thus fitting the hypothesis to the experiment (though the bias is smaller there).

The problem with big data, in fact, is not unlike the problem with observational studies in medical research. In observational studies, statistical relationships are examined on the researcher’s computer. In double-blind cohort experiments, however, information is extracted in a way that mimics real life. The former produces all manner of results that tend to be spurious (as last computed by John Ioannidis) more than eight times out of 10.

Yet these observational studies get reported in the media and in some scientific journals. (Thankfully, they’re not accepted by the Food and Drug Administration.) Stan Young, an activist against spurious statistics, and I found a genetics-based study claiming significance from statistical data even in the reputable New England Journal of Medicine, where the results, according to us, were no better than random.

And speaking of genetics, why haven’t we found much of significance in the dozen or so years since we’ve decoded the human genome?

Well, if I generate (by simulation) a set of 200 variables — completely random and totally unrelated to each other — with about 1,000 data points for each, then it would be near impossible not to find in it a certain number of “significant” correlations of sorts. But these correlations would be entirely spurious. And while there are techniques to control the cherry-picking (such as the Bonferroni adjustment), they don’t catch the culprits — much as regulation didn’t stop insiders from gaming the system. You can’t really police researchers, particularly when they are free agents toying with the large data available on the web.
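The simulation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the author's own code: the function names (`standardize`, `count_spurious`), the Gaussian noise, the 5 percent significance level, and the normal approximation to the test statistic are all choices made here for the example. With 200 variables there are 19,900 distinct pairs, so at a 5 percent level one would expect on the order of a thousand "significant" correlations from pure noise.

```python
import math
import random
from itertools import combinations
from operator import mul
from statistics import NormalDist


def standardize(xs):
    """Center a series and scale it to unit length, so that the dot
    product of two standardized series is their Pearson correlation."""
    mean = sum(xs) / len(xs)
    centered = [x - mean for x in xs]
    norm = math.sqrt(sum(c * c for c in centered))
    return [c / norm for c in centered]


def count_spurious(n_vars=200, n_points=1000, alpha=0.05, seed=0):
    """Generate independent noise variables and count the pairs that look
    'significantly' correlated, with and without a Bonferroni adjustment.

    Assumptions (illustrative only): Gaussian noise, a two-sided test,
    and a normal approximation to the t statistic of the correlation.
    """
    rng = random.Random(seed)
    cols = [
        standardize([rng.gauss(0.0, 1.0) for _ in range(n_points)])
        for _ in range(n_vars)
    ]
    n_pairs = n_vars * (n_vars - 1) // 2

    normal = NormalDist()
    z_naive = normal.inv_cdf(1 - alpha / 2)              # no correction
    z_bonf = normal.inv_cdf(1 - alpha / (2 * n_pairs))   # Bonferroni

    naive = bonferroni = 0
    for a, b in combinations(cols, 2):
        r = sum(map(mul, a, b))                          # Pearson r
        t = abs(r) * math.sqrt((n_points - 2) / (1 - r * r))
        naive += t > z_naive
        bonferroni += t > z_bonf
    return n_pairs, naive, bonferroni


if __name__ == "__main__":
    pairs, naive, bonferroni = count_spurious()
    print(f"pairs tested:                 {pairs}")
    print(f"'significant' (uncorrected):  {naive}")
    print(f"'significant' (Bonferroni):   {bonferroni}")
```

Note that the adjustment only works when the number of comparisons is honestly counted. A researcher who reports a handful of "hits" without disclosing how many pairs were tried gives the reader no way to apply it, which is the policing problem described above.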

I am not saying here that there is no information in big data. There is plenty of information. The problem — the central issue — is that the needle comes in an increasingly larger haystack.
